A First Try: Setting Up Grafana + Prometheus to Monitor Linux Hosts and MySQL

Pull the Docker images

    docker pull prom/node-exporter
    docker pull prom/prometheus
    docker pull grafana/grafana

On the machine where MySQL is installed:

    docker pull prom/mysqld-exporter
    docker run -d --restart=always --name mysqld-exporter -p 9104:9104 -e DATA_SOURCE_NAME="username:password@(192.168.10.xx:3306)/" prom/mysqld-exporter

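Then, on the monitoring host, start node-exporter:

    # note: with --net=host Docker discards the -p mapping; port 9100 is exposed directly on the host
    docker run -d -p 9100:9100 -v /proc:/host/proc:ro -v /sys:/host/sys:ro -v /:/rootfs:ro --net=host prom/node-exporter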
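Before wiring the exporters into Prometheus, it can help to confirm that they actually serve metrics. The following is a minimal sketch in Python, assuming the exporters listen on ports 9104 and 9100 as in the commands above. The helper names `fetch_metrics` and `metric_value` are hypothetical, and the sample text is made up for illustration rather than copied from a real exporter. mysqld-exporter reports `mysql_up 1` when it can reach the database.

```python
import urllib.request


def fetch_metrics(url: str, timeout: float = 5.0) -> str:
    """Download the plain-text metrics page served by an exporter."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8")


def metric_value(text: str, name: str):
    """Return the first sample of an unlabeled metric, or None if it is absent."""
    for line in text.splitlines():
        if line.startswith("#") or not line.strip():
            continue  # skip HELP/TYPE comments and blank lines
        parts = line.split()
        if parts[0] == name and len(parts) >= 2:
            return float(parts[1])
    return None


# Illustrative sample only, not real exporter output.
sample = """# HELP mysql_up Whether the MySQL server is up.
# TYPE mysql_up gauge
mysql_up 1
"""
print(metric_value(sample, "mysql_up"))
```

Against a live exporter the call would look like `metric_value(fetch_metrics("http://192.168.10.xx:9104/metrics"), "mysql_up")`, with the address replaced by your own.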

Create a directory and edit the Prometheus configuration file:

    mkdir /opt/prometheus
    cd /opt/prometheus/
    vim prometheus.yml

    global:
      scrape_interval: 60s
      evaluation_interval: 60s

    scrape_configs:
      - job_name: prometheus
        static_configs:
          - targets: ['localhost:9090']
            labels:
              instance: prometheus

      - job_name: linux
        static_configs:
          - targets: ['192.168.10.xxx:9100']
            labels:
              instance: localhost

      - job_name: mysql
        static_configs:
          - targets: ['192.168.10.xx:9104']
            labels:
              instance: mysql

Start Prometheus with the configuration file mounted:

    docker run -d -p 9090:9090 -v /opt/prometheus/prometheus.yml:/etc/prometheus/prometheus.yml prom/prometheus
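Once Prometheus is running, its HTTP API endpoint `/api/v1/targets` reports whether each scrape target is healthy. The sketch below, in Python, lists the targets that are not `up`. The helper names `fetch_targets` and `down_targets` are hypothetical, and the sample payload is a hand-written illustration that only includes the fields the code reads.

```python
import json
import urllib.request


def fetch_targets(base_url: str, timeout: float = 5.0) -> dict:
    """Fetch the target list from a Prometheus server, e.g. http://localhost:9090."""
    with urllib.request.urlopen(f"{base_url}/api/v1/targets", timeout=timeout) as resp:
        return json.load(resp)


def down_targets(payload: dict) -> list:
    """Return (job, scrapeUrl) pairs for every active target whose health is not 'up'."""
    down = []
    for target in payload["data"]["activeTargets"]:
        if target.get("health") != "up":
            down.append((target["labels"].get("job", ""), target.get("scrapeUrl", "")))
    return down


# Hand-written sample for illustration; a real response carries more fields.
sample = {
    "status": "success",
    "data": {
        "activeTargets": [
            {"labels": {"job": "linux"}, "scrapeUrl": "http://192.168.10.xxx:9100/metrics", "health": "up"},
            {"labels": {"job": "mysql"}, "scrapeUrl": "http://192.168.10.xx:9104/metrics", "health": "down"},
        ]
    },
}
print(down_targets(sample))
```

An empty list from `down_targets(fetch_targets("http://localhost:9090"))` would suggest that all three jobs from prometheus.yml are being scraped.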

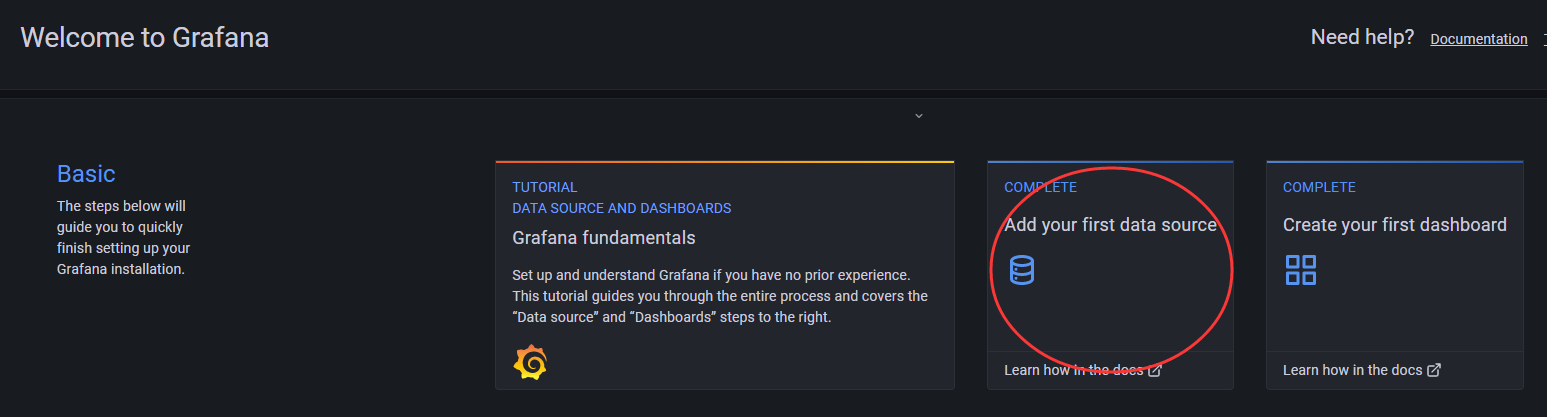

Configure Grafana

    mkdir /opt/grafana-storage
    chmod 777 -R /opt/grafana-storage

    docker run -d -p 3000:3000 --name=grafana -v /opt/grafana-storage:/var/lib/grafana grafana/grafana

Open 192.168.10.xx:3000 and log in with username admin and password admin, then reset the password when prompted.

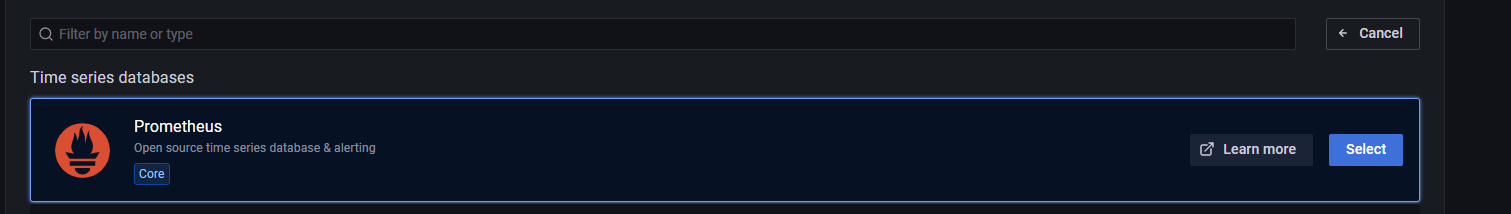

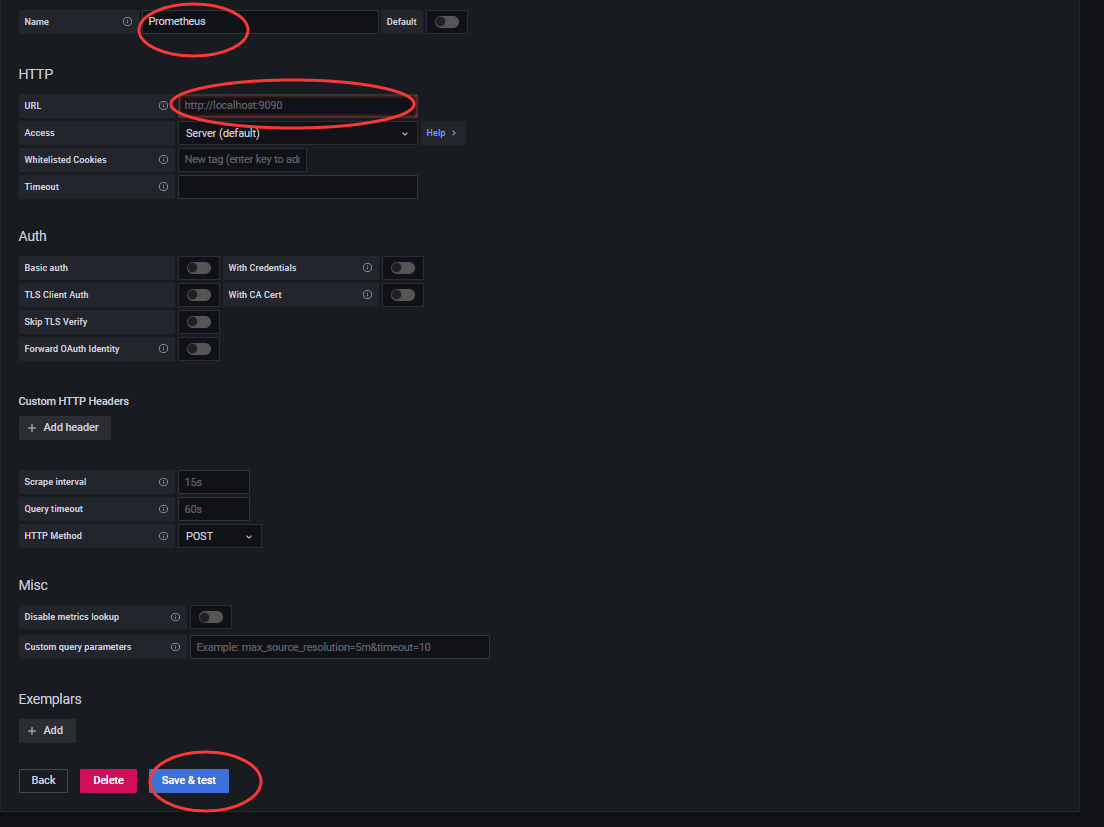

Next, add a data source.

Select the type, enter the Prometheus address, and save. A green success message means the data source works.

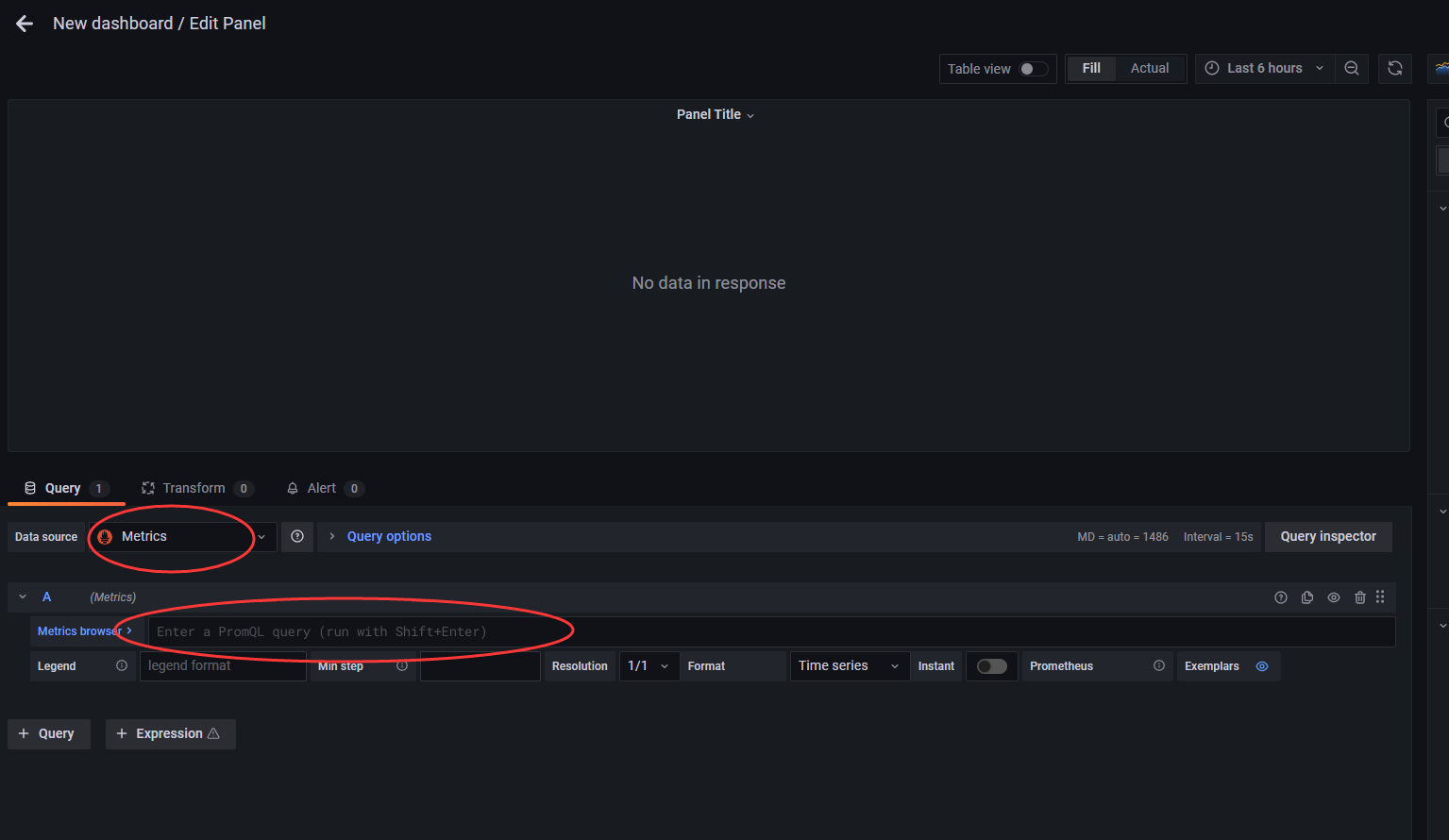

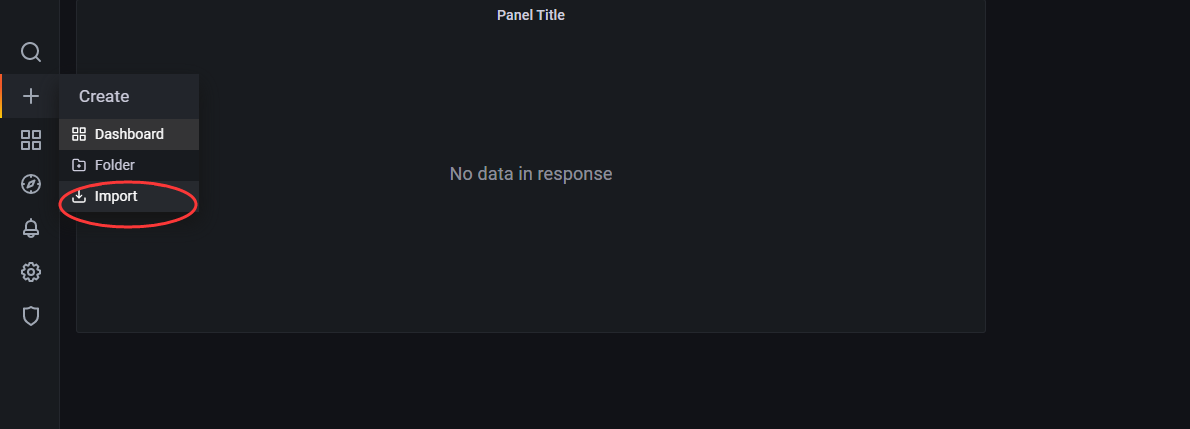

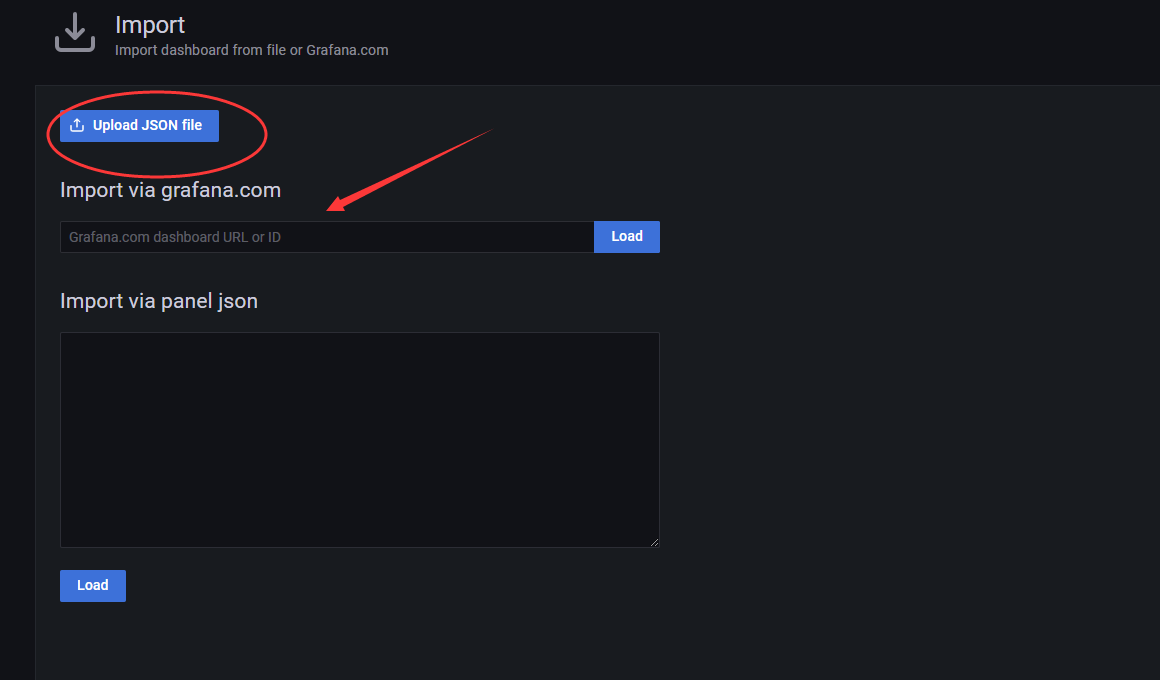

Then create a dashboard.

Select the data source and enter a metric to display a chart.
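As an illustration of what "entering a metric" can look like, the sketch below builds a PromQL expression for CPU usage from the node-exporter metric `node_cpu_seconds_total` and shows how the same expression could be sent to the Prometheus HTTP API outside Grafana. It is written in Python; the helper names `query_url` and `instant_values` are hypothetical, the `job="linux"` selector assumes the job name from the prometheus.yml above, and the sample response is hand-written.

```python
from urllib.parse import urlencode

# Percentage of CPU time not spent idle, averaged per instance over 5 minutes.
CPU_BUSY = (
    '100 - avg by (instance) '
    '(rate(node_cpu_seconds_total{job="linux",mode="idle"}[5m])) * 100'
)


def query_url(base_url: str, expr: str) -> str:
    """Build an instant-query URL for the Prometheus HTTP API."""
    return f"{base_url}/api/v1/query?{urlencode({'query': expr})}"


def instant_values(payload: dict) -> dict:
    """Map each instance label to its float value in an instant-query response."""
    values = {}
    for series in payload["data"]["result"]:
        _timestamp, raw = series["value"]  # the value arrives as a string
        values[series["metric"].get("instance", "")] = float(raw)
    return values


# Hand-written sample response for illustration.
sample = {
    "status": "success",
    "data": {
        "resultType": "vector",
        "result": [{"metric": {"instance": "localhost"}, "value": [1700000000, "12.5"]}],
    },
}
print(query_url("http://localhost:9090", "up"))
print(instant_values(sample))
```

The `CPU_BUSY` expression can be pasted as-is into the query field of a Grafana panel that uses the Prometheus data source.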

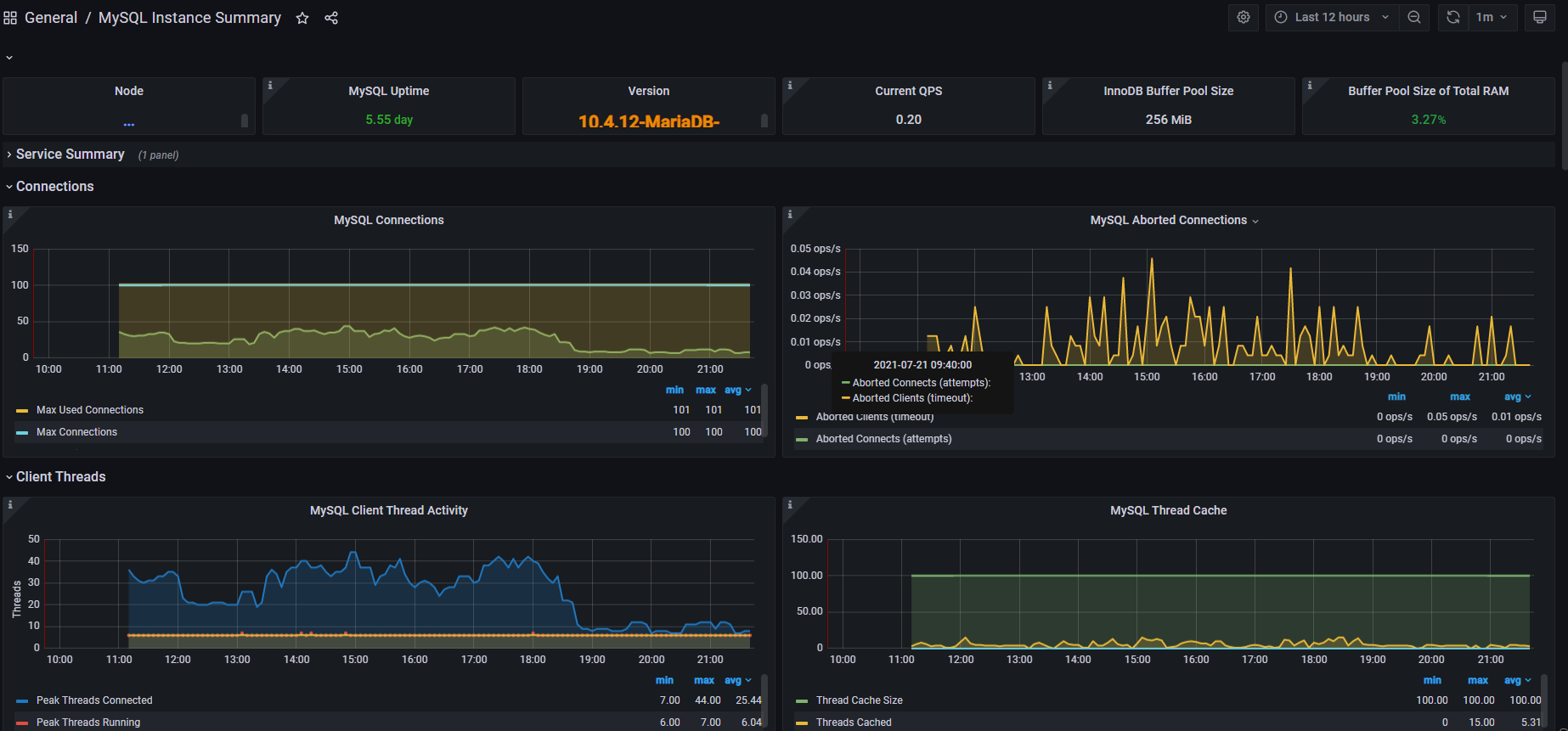

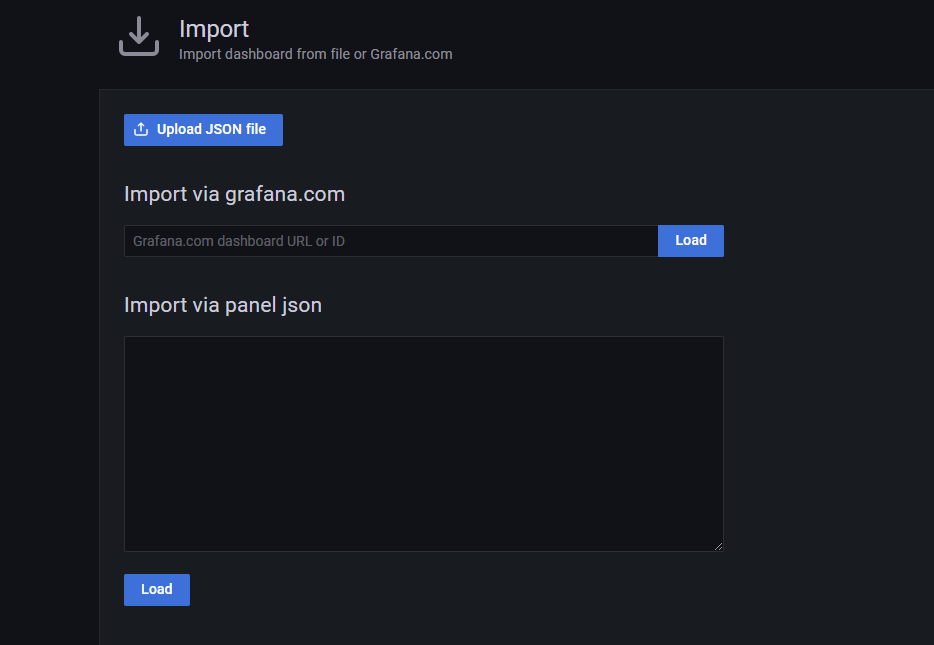

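You can also import a dashboard.

Either of the following two methods works. For example, you can import the open-source MySQL monitoring dashboard (https://github.com/percona/grafana-dashboards/blob/PMM-2.0/dashboards/MySQL_Instances_Overview.json)

After importing, it is displayed as follows:

If an imported dashboard is missing a plugin, you can download the zip from the official site, extract it into the plugins directory, and restart Grafana. You can also install it online from the command line, for example (https://grafana.com/grafana/plugins/digiapulssi-breadcrumb-panel/?tab=installation):

    grafana-cli plugins install digiapulssi-breadcrumb-panel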

Setting up ELK + Grafana to monitor nginx

First, the nginx access log must be written in JSON format.

Change the log format in nginx.conf:

    http {
        include /etc/nginx/mime.types;
        default_type application/octet-stream;

        log_format main '{"@timestamp":"$time_iso8601",'
                        '"@source":"$server_addr",'
                        '"hostname":"$hostname",'
                        '"ip":"$remote_addr",'
                        '"client":"$remote_addr",'
                        '"request_method":"$request_method",'
                        '"scheme":"$scheme",'
                        '"domain":"$server_name",'
                        '"referer":"$http_referer",'
                        '"request":"$request_uri",'
                        '"args":"$args",'
                        '"size":$body_bytes_sent,'
                        '"status": $status,'
                        '"responsetime":$request_time,'
                        '"upstreamtime":"$upstream_response_time",'
                        '"upstreamaddr":"$upstream_addr",'
                        '"http_user_agent":"$http_user_agent",'
                        '"https":"$https"'
                        '}';

        access_log /var/log/nginx/access.log main;

        sendfile on;
        #tcp_nopush on;

        keepalive_timeout 65;

        #gzip on;

        include /etc/nginx/conf.d/*.conf;
    }
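To check that the new log format really produces valid JSON, one line of the access log can be parsed by hand. The following is a minimal sketch in Python; the helper name `parse_access_line` is hypothetical, and the sample line is made up (it contains only a subset of the fields defined in the log format). Note that nginx writes `-` for `$upstream_response_time` when a request is not proxied, so that field cannot always be converted to a number.

```python
import json


def parse_access_line(line: str) -> dict:
    """Parse one JSON access-log line and normalise the numeric fields."""
    event = json.loads(line)  # raises ValueError if the line is not valid JSON
    event["status"] = int(event["status"])
    event["size"] = int(event["size"])
    event["responsetime"] = float(event["responsetime"])
    try:
        event["upstreamtime"] = float(event["upstreamtime"])
    except (KeyError, ValueError):
        event["upstreamtime"] = None  # "-" or missing: no upstream was involved
    return event


# Made-up sample line for illustration, using field names from the log_format.
sample_line = (
    '{"@timestamp":"2020-05-01T12:00:00+08:00","client":"203.0.113.7",'
    '"request":"/index.html","size":612,"status": 200,'
    '"responsetime":0.005,"upstreamtime":"-"}'
)
event = parse_access_line(sample_line)
print(event["status"], event["responsetime"], event["upstreamtime"])
```

Running the function over the last few lines of /var/log/nginx/access.log is a quick way to spot lines that break the JSON, for example a user agent containing a double quote.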

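Next, set up ELK under Docker. My earlier article can also serve as a reference (https://blog.magicdu.cn/694.html)

    docker pull elasticsearch:7.6.2
    docker pull logstash:7.6.2
    docker pull kibana:7.6.2

Create the required directories. Note that the data directory needs its permissions set, otherwise elasticsearch fails to start because it has no access to it.

    mkdir -p /mysoft/elk/elasticsearch/data/
    chmod -R 777 /mysoft/elk/elasticsearch/data/

Create a directory for the logstash configuration and write the config file (logstash-nginx.conf, the file name that the docker-compose file mounts):

    mkdir -p /mysoft/elk/logstash

The initial configuration is as follows. We will revise it once the rest of the environment is set up.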

    input {
      file {
        ## change this to the nginx log path in your environment
        path => "/var/logs/nginx/access.log"
        ignore_older => 0
        codec => json
      }
    }

    output {
      elasticsearch {
        hosts => "es:9200"
        index => "nginx-logstash-%{+YYYY.MM.dd}"
      }
    }

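Then write the docker-compose file and start the ELK environment. Pay attention to the mounted directories: because we are monitoring nginx, the nginx log directory must be mapped into the logstash container.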

    version: '3'
    services:
      elasticsearch:
        image: elasticsearch:7.6.2
        container_name: elasticsearch
        environment:
          - "cluster.name=elasticsearch" # set the cluster name to elasticsearch
          - "discovery.type=single-node" # start in single-node mode
          - "ES_JAVA_OPTS=-Xms512m -Xmx512m" # set the JVM heap size
        volumes:
          - /mysoft/elk/elasticsearch/plugins:/usr/share/elasticsearch/plugins # mount for plugin files
          - /mysoft/elk/elasticsearch/data:/usr/share/elasticsearch/data # mount for data files
        ports:
          - 9200:9200
      kibana:
        image: kibana:7.6.2
        container_name: kibana
        links:
          - elasticsearch:es # elasticsearch is reachable under the hostname es
        depends_on:
          - elasticsearch # start kibana after elasticsearch
        environment:
          - "elasticsearch.hosts=http://es:9200" # address used to reach elasticsearch
        ports:
          - 5601:5601
      logstash:
        image: logstash:7.6.2
        container_name: logstash
        volumes:
          - /mysoft/elk/logstash/logstash-nginx.conf:/usr/share/logstash/pipeline/logstash.conf # mount the logstash config file
          - /root/nginx/logs/:/var/logs/nginx/
        depends_on:
          - elasticsearch # start logstash after elasticsearch
        links:
          - elasticsearch:es # elasticsearch is reachable under the hostname es
        ports:
          - 9205:9205

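Start the environment:

    docker-compose up -d

To adjust the memory used by logstash, edit the file /usr/share/logstash/config/jvm.options inside the container and set the heap parameters to a size that suits your machine.

Install the json_lines plugin in logstash:

    # enter the logstash container
    docker exec -it logstash /bin/bash
    # go to the bin directory
    cd /bin/
    # install the plugin
    logstash-plugin install logstash-codec-json_lines
    # leave the container
    exit
    # restart the logstash service
    docker restart logstash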

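Configure GeoIP in logstash. Since we want statistics on the regions nginx is accessed from, a GeoIP database is required. Register and log in at the following address to download it:

https://www.maxmind.com/en/accounts/584275/geoip/downloads

Then mount or copy the database into the logstash container, update the logstash config file, and restart.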

    input {
      file {
        ## change this to the nginx log path in your environment
        path => "/var/logs/nginx/access.log"
        ignore_older => 0
        codec => json
      }
    }

    filter {
      mutate {
        convert => [ "status","integer" ]
        convert => [ "size","integer" ]
        convert => [ "upstreamtime","float" ]
        convert => ["[geoip][coordinates]", "float"]
        remove_field => "message"
      }
      date {
        match => [ "timestamp" ,"dd/MMM/YYYY:HH:mm:ss Z" ]
      }
      geoip {
        source => "client" ## the field in the log format that holds the client IP ("client":"$remote_addr")
        target => "geoip"
        database =>"/usr/share/GeoIP/GeoLite2-City.mmdb" ## path of the downloaded GeoIP database
        add_field => [ "[geoip][coordinates]", "%{[geoip][longitude]}" ]
        add_field => [ "[geoip][coordinates]", "%{[geoip][latitude]}" ]
      }
      mutate {
        remove_field => "timestamp"
      }
      ### GeoIP lookup fails for private (intranet) addresses and adds the
      ### _geoip_lookup_failure tag; events carrying that tag are dropped here.
      if "_geoip_lookup_failure" in [tags] { drop { } }
    }

    output {
      elasticsearch {
        hosts => "es:9200"
        index => "nginx-logstash-%{+YYYY.MM.dd}"
      }
    }
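Two behaviours of the filter above are easy to miss: `[geoip][coordinates]` is built with longitude first and latitude second (the array order Elasticsearch uses for a geo_point), and requests from addresses that cannot be located never reach Elasticsearch. The sketch below mirrors both in Python so they can be reasoned about outside Logstash. It is an approximation, not the plugin's implementation: the helper names are hypothetical, and "private, loopback, or link-local" is only a stand-in for "GeoIP lookup fails".

```python
import ipaddress


def is_internal(ip: str) -> bool:
    """Approximate the addresses a public GeoIP database cannot locate."""
    addr = ipaddress.ip_address(ip)
    return addr.is_private or addr.is_loopback or addr.is_link_local


def coordinates(longitude, latitude) -> list:
    """Build the [lon, lat] pair in the same order as the two add_field lines."""
    return [float(longitude), float(latitude)]


def keep_event(event: dict) -> bool:
    """Mirror the drop rule: events from internal clients are discarded."""
    return not is_internal(event["client"])


# Made-up events for illustration.
events = [{"client": "192.168.10.5"}, {"client": "8.8.8.8"}]
print([e["client"] for e in events if keep_event(e)])
print(coordinates("116.4", "39.9"))
```

One consequence worth keeping in mind: if nginx is mostly accessed from an intranet, the drop rule removes most events, and the dashboards will look nearly empty.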

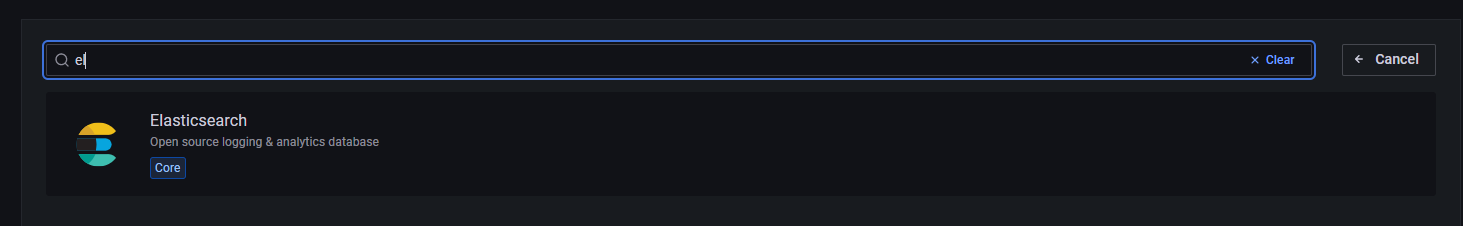

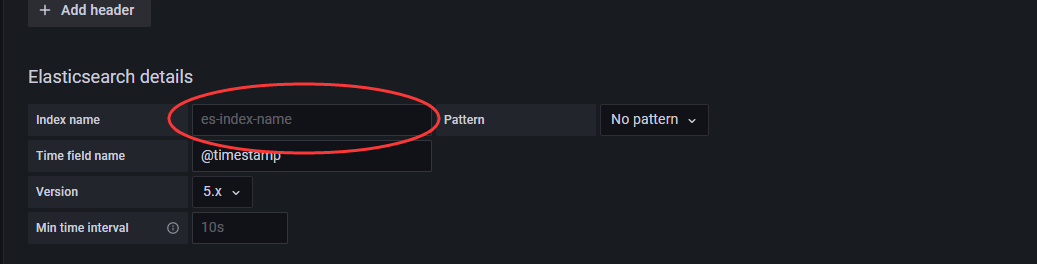

Configure Elasticsearch as a Grafana data source

Set the index name so that it matches the nginx-logstash indices written by logstash.
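The index name follows from the `index => "nginx-logstash-%{+YYYY.MM.dd}"` setting in the logstash output: one index per day, with the date taken from the event's `@timestamp` in UTC. A small Python sketch, with the hypothetical helper `index_for`, shows which index a given request time lands in.

```python
from datetime import datetime, timedelta, timezone


def index_for(ts: datetime) -> str:
    """Return the daily index name for a timezone-aware event timestamp."""
    return ts.astimezone(timezone.utc).strftime("nginx-logstash-%Y.%m.%d")


# A request at 07:30 on 1 May in UTC+8 is still 30 April in UTC.
beijing = timezone(timedelta(hours=8))
print(index_for(datetime(2020, 5, 1, 7, 30, tzinfo=beijing)))
```

Because of the UTC rollover, early-morning requests in UTC+8 appear in the previous day's index. A wildcard pattern that covers all of the daily indices avoids having to think about this in Grafana.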

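Then import an nginx dashboard.

Search for "nginx logs" on the official site: https://grafana.com/grafana/dashboards

Copy the dashboard ID and import it. If a plugin is missing after the import, install it from the command line or download the archive from the official site and add it manually.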

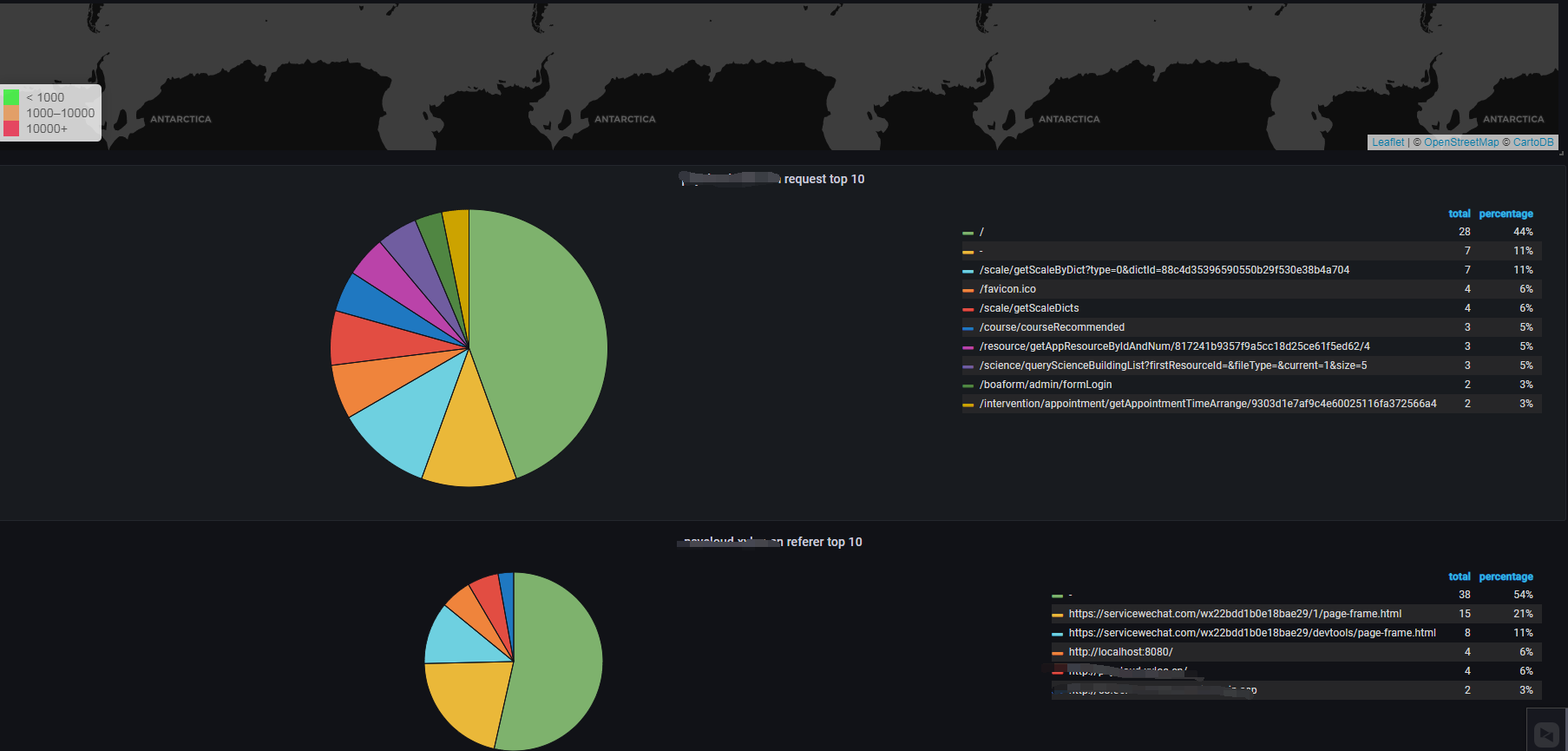

The final result looks like this:

Reference: https://blog.csdn.net/qq_25934401/article/details/83345144